Mechanistic Interpretability

Mechanistic interpretability seeks to reverse engineer neural networks, similar to how one might reverse engineer a compiled binary computer program. After all, neural network parameters are in some sense a binary computer program which runs on one of the exotic virtual machines we call a neural network architecture.

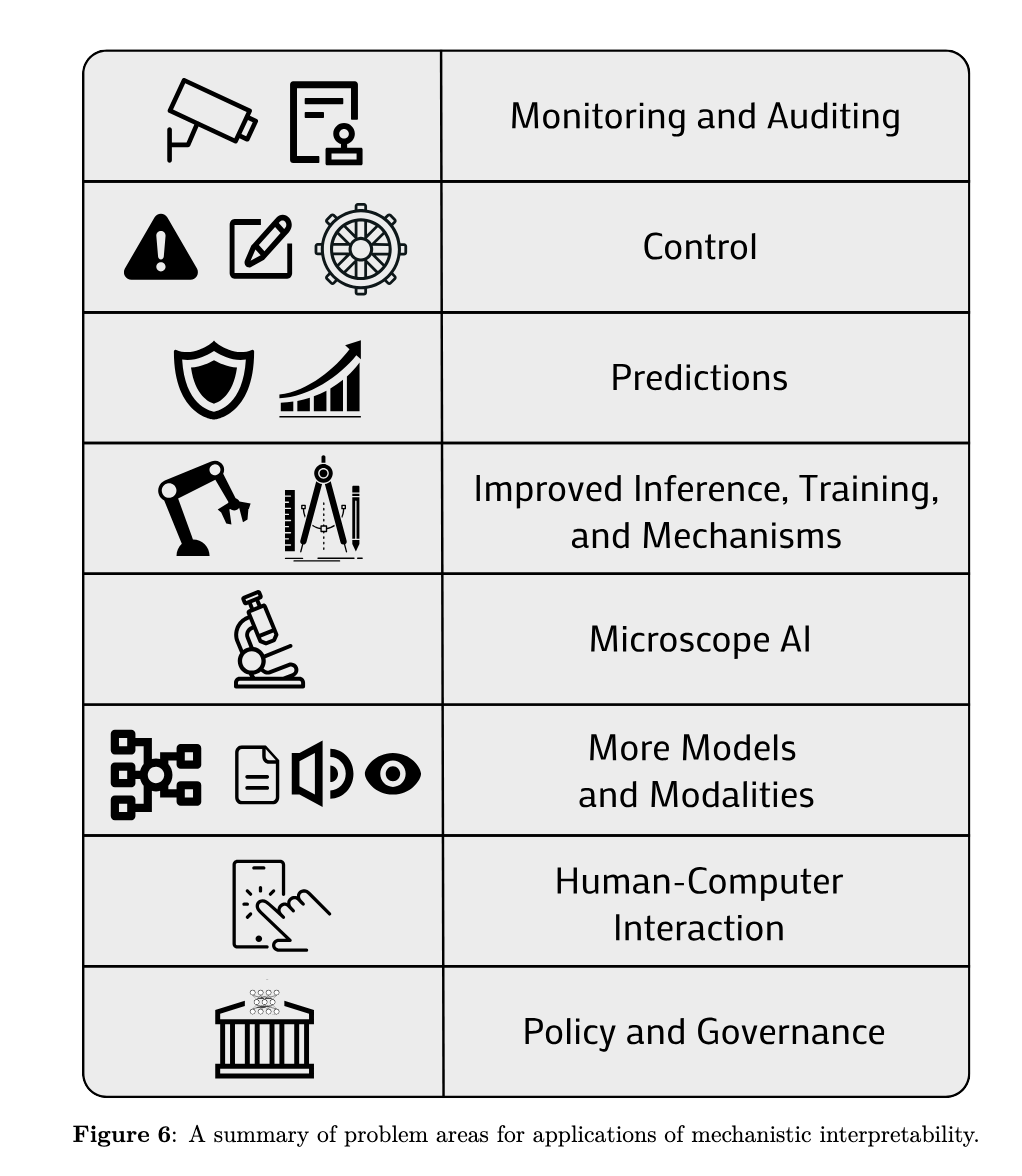

Where does it fit into the broader picture of alignment?

1. Core Content and Discussions

1.1 Introduction to Mechanistic Interpretability (15 minutes)

1.2 Zoom In: An Introduction to Circuits (30 minutes)

1.3 Let’s Try To Understand AI Monosemanticity (25 minutes)

- Check out Toy Models of Superposition

- Check out Towards Monosemanticity: Decomposing Language Models With Dictionary Learning

1.4 Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet (10 minutes)

- Scaling Laws section

- Examples of Feature Interpretability

- Feature Visualization